CNNs and Equivariance - Part 1/2

CNNs are famously equivariant with respect to translation. This means that translating the input to a convolutional layer will result in translating the output. Arguably, this property played a pivotal role in the advent of deep learning, reducing the number of trainable parameters by orders of magnitude. So, given the importance of equivariance in deep learning, wouldn’t it be nice to design a convolutional layer which is equivariant not only with respect to translation but also, for instance, rotation and mirroring?

Taco Cohen and Max Welling’s 2016 paper Group Equivariant Convolutional Networks pioneered a group theoretical approach to extending translation equivariance of CNNs to further symmetry classes. The paper has an important role in the literature as it arguably kicked off an entire stream of work. However, it is, as attested by many, not easy to understand, especially if you aren’t familiar with the relevant mathematical background. In this series of two blog posts, we aim to make this topic a bit more approachable.

In this post, we start by introducing the concept of equivariance, from both a practical and a mathematical point of view. We explain why it plays such a crucial role in deep learning, how it is enforced in the case of translation equivariance, and how it can be enforced more generally. In the second part, we dive into Taco Cohen and Max Welling’s paper, which proposes a concrete approach for extending equivariance in CNNs to rotations and mirroring.

Intro

In machine learning, we’re often concerned with the flexibility of our models. We’d like to know that the model we choose is actually capable of the task that we want it to perform. For instance, the universal approximation theorem for neural networks gives us confidence that neural networks can approximate a very broad class of functions to any desired degree of accuracy [1].

There is a downside to complete flexibility. While we know that we can learn our target function, there’s also a whole universe of incorrect functions that look exactly the same on our training data. If we’re totally flexible, our model could learn any one of these functions, and once we move away from the training data, we might fail to generalise. For this reason, it’s often desirable to restrict our flexibility a little. If we can identify a smaller class of functions which still contains our target, and build an architecture which only learns functions in this class, we rule out many wrong answers while still allowing our model enough flexibility to learn the right answer. This might make a big difference to the necessary amount of training data or, given the same training data, make the difference between a highly successful model and one that performs very poorly.

A famous success story for this reduction in flexibility is the CNN. The convolutional structure of the CNN encodes the translation symmetries of image data. We’ve given up total flexibility and can no longer learn functions which lack translation symmetry, but we don’t lose any useful flexibility, because we know that our target function does have translation symmetry. In return, we have a much smaller universe of functions to explore, and we’ve reduced the number of parameters that we need to train.
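To get a feel for the size of this reduction, here is a rough back-of-the-envelope count. The numbers are our own illustrative assumptions (a single-channel 224×224 input, an output of the same size, a single 3×3 filter, no biases) and are not taken from any particular network.

```python
# Illustrative assumptions: one input channel, one output channel, no biases.
height, width = 224, 224
kernel_size = 3

n_pixels = height * width

# A fully connected layer mapping the image to an image-shaped output
# needs one weight per (input pixel, output pixel) pair.
dense_weights = n_pixels ** 2

# A convolutional layer shares one small filter across all positions.
conv_weights = kernel_size ** 2

print(f"dense: {dense_weights:,} weights, conv: {conv_weights} weights")
```

Under these assumptions the fully connected layer needs roughly 2.5 billion weights, while the convolutional filter needs nine.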

Of course, images are not the only kind of data with useful structure, and translation symmetries are not the only kind of symmetry. The CNN is extremely successful on the right kind of data, but can we do something similar to encode other useful structure in other kinds of data? Cohen and Welling’s work gives us a general framework for doing just this.

We’ve been waving our hands a lot in this introduction talking about “structure” and “symmetries”, and the first thing we’ll need to do to understand Cohen and Welling is to say precisely what we mean here. The crucial concept we need to make this precise is equivariance.

What is equivariance?

There’s more than one way, of course, of taking the fuzzy idea of “encoding structure in a model” and making it precise. Here’s one way of starting with the fuzzy intuition and getting gradually more precise – this particular train of thought is going to lead us to equivariance.

- One aspect of the structure in our data is that it satisfies some symmetries. We should build a model which incorporates our knowledge of these symmetries.

- Our model should preserve the symmetries of our data. That is, the outputs of the model should retain the symmetries of the inputs.

- For any symmetry operation $\sigma$, applying $\sigma$ to our input and then passing it through the model should be the same as applying $\sigma$ to the output of the model.

If we write $f$ for the function learned by our model, this final statement translates directly into an equation:

\[f\big(\sigma (x)\big) = \sigma \big(f(x)\big)\]

If this equation holds, we say that $f$ is equivariant with respect to $\sigma$. If it holds for every $\sigma$ in some collection $S$ of symmetry operations, we say that $f$ is equivariant with respect to $S$.

Making the above fully precise requires some mathematical machinery (specifically, group theory [2]), but for now, we’ll take this as our definition of equivariance. In particular, the full definition of equivariance doesn’t require the function $\sigma$ applied to the output to be identical to the one applied to the input. However, to keep things from getting too complex, it is helpful to assume that they are identical. Moreover, we will later see that Group Equivariant Convolutional Networks fulfil this stricter definition of equivariance [3].
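For reference, the more general definition can be sketched as follows. The symbols $T_\sigma$ and $T'_\sigma$ are notation we introduce here for illustration: $T_\sigma$ is the way $\sigma$ acts on inputs, and $T'_\sigma$ is the (possibly different) way it acts on outputs.

\[f\big(T_\sigma (x)\big) = T'_\sigma \big(f(x)\big)\]

Our simplified definition is the special case where $T_\sigma$ and $T'_\sigma$ are the same operation.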

Our definition of equivariance looks a little like part of the definition of linearity. With linearity, we can do scalar multiplication either inside or outside the function. With equivariance, we can do symmetry operations either inside or outside the function. This property of linear functions can actually be stated as “linear functions are equivariant with respect to scalar multiplication”. So even though equivariance may be an unfamiliar concept, you’ve already met it in disguise if you’re familiar with linear functions!
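As a quick sanity check of this analogy, here is a minimal sketch using NumPy. The matrix `A`, the vector `x` and the scalar `c` are arbitrary values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.normal(size=(3, 4))  # an arbitrary linear map
x = rng.normal(size=4)       # an arbitrary input vector
c = 2.5                      # the "symmetry operation": multiply by c


def f(v):
    """A linear function, represented by the matrix A."""
    return A @ v


# Scaling inside the function should match scaling outside it.
scaling_equivariant = np.allclose(f(c * x), c * f(x))
print(scaling_equivariant)
```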

To get a more concrete grasp on equivariance, let’s think about CNNs again. The following video shows the equivariance of CNNs with respect to translation. A shift to the input image directly corresponds to a shift of the output features. (The full video is available here).
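The same behaviour can be checked numerically. The sketch below is a toy version of a convolutional layer, written under simplifying assumptions of our own: a single channel, a single filter, and periodic (wrap-around) boundaries so that translations are exact. The helper names `circular_correlate` and `translate` are made up for this example.

```python
import numpy as np


def circular_correlate(image, kernel):
    """Slide the kernel over the image, wrapping around at the borders."""
    out = np.zeros_like(image, dtype=float)
    kh, kw = kernel.shape
    for i in range(kh):
        for j in range(kw):
            # Rolling by (-i, -j) moves pixel (r + i, c + j) to position (r, c).
            out += kernel[i, j] * np.roll(image, shift=(-i, -j), axis=(0, 1))
    return out


def translate(x, dy, dx):
    """Shift an array by (dy, dx) pixels with wrap-around."""
    return np.roll(x, shift=(dy, dx), axis=(0, 1))


rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8))
kernel = rng.normal(size=(3, 3))

# Translate first, then apply the layer ...
lhs = circular_correlate(translate(image, 2, 3), kernel)
# ... versus apply the layer first, then translate.
rhs = translate(circular_correlate(image, kernel), 2, 3)

translation_equivariant = np.allclose(lhs, rhs)
print(translation_equivariant)
```

With zero padding instead of wrap-around, the two sides would agree only away from the image borders.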

It is also helpful to relate the concept of equivariance to the perhaps more familiar concept of invariance. A function $f$ is invariant with respect to $\sigma$ if its output doesn’t change when $\sigma$ is applied to the input. The $\sigma$ on the right-hand side of the equation above then disappears, leaving:

\[f\big(\sigma(x)\big) = f(x)\]

If a layer is an equivariant embedding of the input with respect to a certain symmetry, it can always be turned into an invariant embedding in a later layer. Whether this can be done in a straightforward and meaningful manner depends on how the equivariance is implemented. Famous examples where this works are networks where multiple convolutional layers are followed by a global average pooling layer (GAP). In this case, everything until the GAP layer is translation equivariant, but the output of the GAP layer (and of the entire network) is invariant with respect to translations of the input. We will later see that this principle can also be applied to group equivariant convolutional layers.
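Here is a minimal sketch of the GAP argument. We assume a single feature map and wrap-around shifts, and we use a random array as a stand-in for the output of the equivariant layers. Because those layers are equivariant, translating the input amounts to translating this feature map.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the output of a stack of translation-equivariant layers.
feature_map = rng.normal(size=(8, 8))


def global_average_pool(x):
    """Average over all spatial positions, discarding location information."""
    return x.mean()


# By equivariance, a translated input produces a translated feature map.
shifted_feature_map = np.roll(feature_map, shift=(2, 3), axis=(0, 1))

gap_invariant = np.isclose(
    global_average_pool(shifted_feature_map),
    global_average_pool(feature_map),
)
print(gap_invariant)
```

The average doesn’t care where the values sit, so the shift has no effect on the pooled output.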

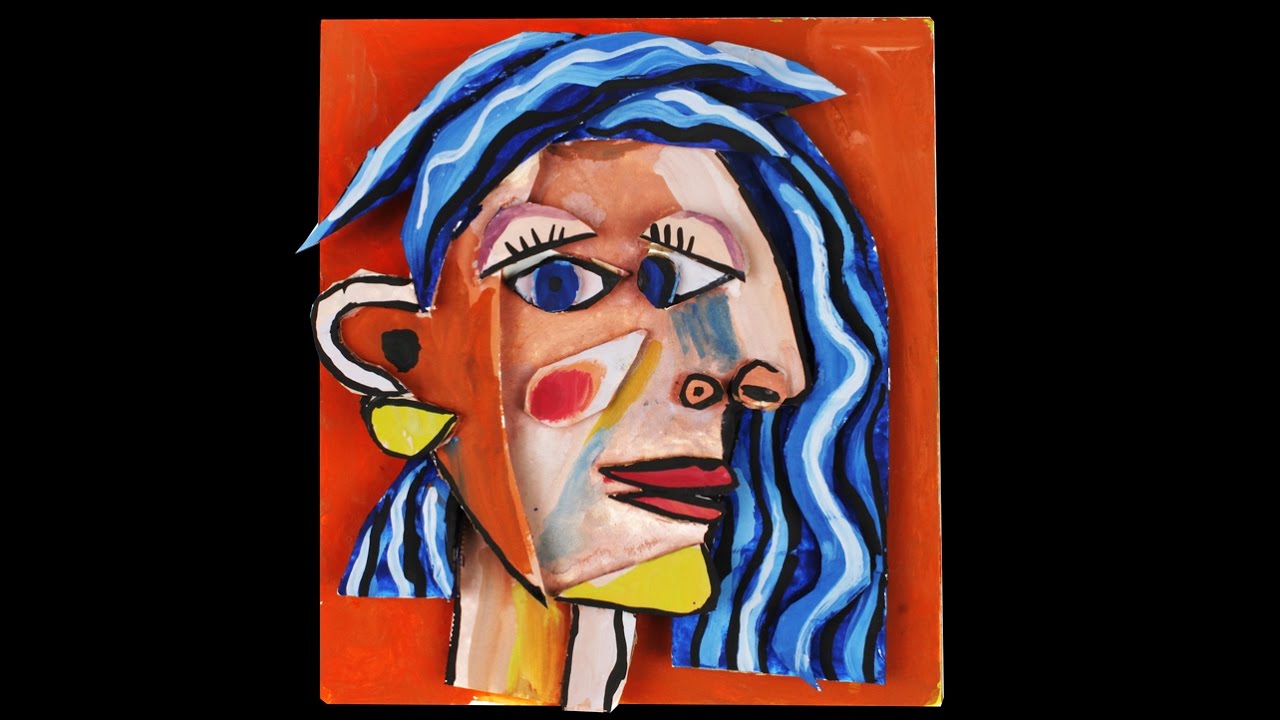

If we think about convolutional layers in a neural network trained for object classification, it becomes clear why this is a very useful property. We typically want the final output to be invariant with respect to translations (within reason – obviously shifting the entire object out of the frame should change the output). However, the first few layers should be equivariant, not invariant. Let’s look at the following image:

Assume that after a few layers, the receptive field and the complexity of the operations are both large enough to detect features like noses and eyes but not yet large enough to detect faces. Dropping spatial information at this point (i.e. becoming translation invariant) would make it impossible for the network to see that both eyes of the person are located on the same cheek: Picasso’s surrealistic painting would look like a regular face to the network (credit for this analogy goes to Daniel Worrall). Hence there is an interesting interplay between receptive field size, network depth, invariance and equivariance.

How CNNs enforce equivariance

We’ve stated above that CNNs are equivariant with respect to translation, but now let’s dig into why this is so. It’s worth being a little careful here – the familiar intuitive picture of how CNNs work is most easily framed in terms of motion, but of course nothing here is really in motion. In reality there are many stationary copies of a convolutional filter, each of which is centred over a different pixel. The intuition of motion is very helpful though, so we’ll keep talking in those terms.

Let’s think of a single filter in a convolutional layer. We’ll pass an image through this, and get an image-shaped map of activations. Recalling briefly how a convolutional layer works, we’re going to slide our filter over the input image. The activation at each point in the output is computed when the convolutional filter sits directly above that point in the input image.

Now let’s change our perspective a little. Usually, we think of holding the image fixed while the convolutional filter moves above it. But what if we imagine holding the filter fixed, and moving the image? The two perspectives are visualised in the video below:

(Pixel art from here.)

On the left hand side we have a fixed image and a moving filter. On the right hand side we have a fixed filter and a moving image. Imagining that these are physical objects in motion, we’re looking at the same physical system from two different viewpoints – one viewpoint follows the image, and one follows the filter. This is actually how we created the video – it’s one scene rendered from two different cameras.

But remember that motion isn’t really the right way to think about what’s happening here. In reality, our old perspective had a single image and many translated copies of the filter, and our new perspective has a single filter and many translated copies of the image. We can think about the behaviour of the CNN from either point of view. From the single-filter perspective, here’s how the convolutional layer operates:

1. We have a function on images (convolution with our filter).

2. We feed many translated copies of our input image to this function.

3. For each translation, we store the computed function value.

Now, what happens if we have a new input image, which is just a translated version of our old one? Do we need to recompute anything? Of course not! We’ve already looked at every translated version of our old input image in step 2. The results of the required computations are already there in our output – we just need to look them up. The only problem is that they’re not going to live in exactly the right place. Instead, they’re translated over by the same amount as our new image is. In other words, if we translate the input, we just translate the output by the same amount. This is exactly translation equivariance!
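The single-filter perspective and the look-up argument can be sketched in a few lines of NumPy. This is a toy setup of our own: a small single-channel image, wrap-around translations, and hypothetical helper names `response` and `layer`.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.normal(size=(6, 6))
kernel = rng.normal(size=(3, 3))


def response(img):
    """The fixed function: the filter sits in the top-left corner and never moves."""
    return float(np.sum(kernel * img[:3, :3]))


def layer(img):
    """Feed every translated copy of the image to `response` and store the results."""
    height, width = img.shape
    out = np.empty((height, width))
    for dy in range(height):
        for dx in range(width):
            translated_copy = np.roll(img, shift=(-dy, -dx), axis=(0, 1))
            out[dy, dx] = response(translated_copy)
    return out


old_output = layer(image)

# A new input that is just a translated version of the old one.
new_image = np.roll(image, shift=(2, 1), axis=(0, 1))
new_output = layer(new_image)

# Nothing new was computed: the new output is the old output, translated.
lookup_matches = np.allclose(new_output, np.roll(old_output, shift=(2, 1), axis=(0, 1)))
print(lookup_matches)
```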

We’ll need to fill in some details here. It’s clear that the original output really does contain all the information we need to construct our new output. We might already have an intuition that the way to recover this information is with a translation in the output space, and it turns out that this intuition is correct, but we’ll need to do some maths to prove it. We’ll come back to this in part 2.

Enforcing equivariance more generally

Now suppose that we have some other group of symmetry transformations, and we want to be equivariant with respect to these. It’s just a case of saying “transform” instead of “translate” in our 3-step process from above:

1. We have a function on images (convolution with our filter).

2. We feed many transformed copies of our input image to this function.

3. For each transformation, we store the computed function value.

Once again, it’s clear that if we see a transformed version of an old image, we don’t need to do any new computation. We’ve already seen the transformed version in step 2, and we just need to look up the relevant outputs. How? By applying the same transformation in the output space! And just like that, we can get equivariance with respect to any group of transformations we like.
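As a toy illustration, here is the same recipe for rotations by multiples of 90 degrees. This is a sketch under our own assumptions, not the construction from the paper: the filter covers the whole (square) image, and `response` and `rotation_layer` are made-up names. The output has one entry per rotation, so in this setup rotating in the output space means cyclically shifting that index.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.normal(size=(5, 5))
kernel = rng.normal(size=(5, 5))


def response(img):
    """The fixed function: overlay the filter on the image and sum."""
    return float(np.sum(kernel * img))


def rotation_layer(img):
    """Store the response to the image rotated by 0, 90, 180 and 270 degrees."""
    return np.array([response(np.rot90(img, k)) for k in range(4)])


old_output = rotation_layer(image)

# A new input that is just a rotated version of the old one.
new_output = rotation_layer(np.rot90(image, 1))

# The new output contains the old values, cyclically shifted by one rotation.
rotation_equivariant = np.allclose(new_output, np.roll(old_output, -1))
print(rotation_equivariant)
```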

Conclusion

This was an introduction to the concept of equivariance. If you don’t want to go deeper into the maths and just wanted to get a broad sense of what this research area is about, feel free to stop here. Otherwise, stick around to read the second part of this two-part series, where we explain how the paradigm from the last section can be put into practice. It’s ready! 🙂

Credit: I’m funded by the EPSRC Centre for Doctoral Training in Autonomous Intelligent Machines and Systems. Fabian is funded by the Bosch Center for AI where he is currently undertaking a research sabbatical. A big thank you also to Daniel Worrall for a number of conversations on this topic, and Adam Golinski and Mike Osborne for proofreading and feedback.

[1] In theory, at least! In practice the degree of accuracy may be limited by the finite amount of computation we can do.

[2] Even more specifically, the main group-theoretic concept at play here is that of an action. We can (and will!) safely ignore the distinction between groups and actions for the purpose of these blog posts, but if you want to dig deeper into the maths in the paper, a basic understanding of group actions would be helpful.

[3] There’s a caveat to this: the strict definition does hold for all the intermediate layers, but the first layer of the network looks a bit different, and only has the less strict property.