Permutation Invariant Neural Networks

Publications

On the Limitations of Representing Functions on Sets (ICML 2019)

Edward Wagstaff*, Fabian Fuchs*, Martin Engelcke*, Ingmar Posner, Michael A. Osborne (* indicates equal contribution)

If you’re looking for a more approachable explanation than our ICML paper, Fabian Fuchs, Martin Englecke and I put together a blog post explaining this work, in collaboration with Ferenc Huszár.

About the project

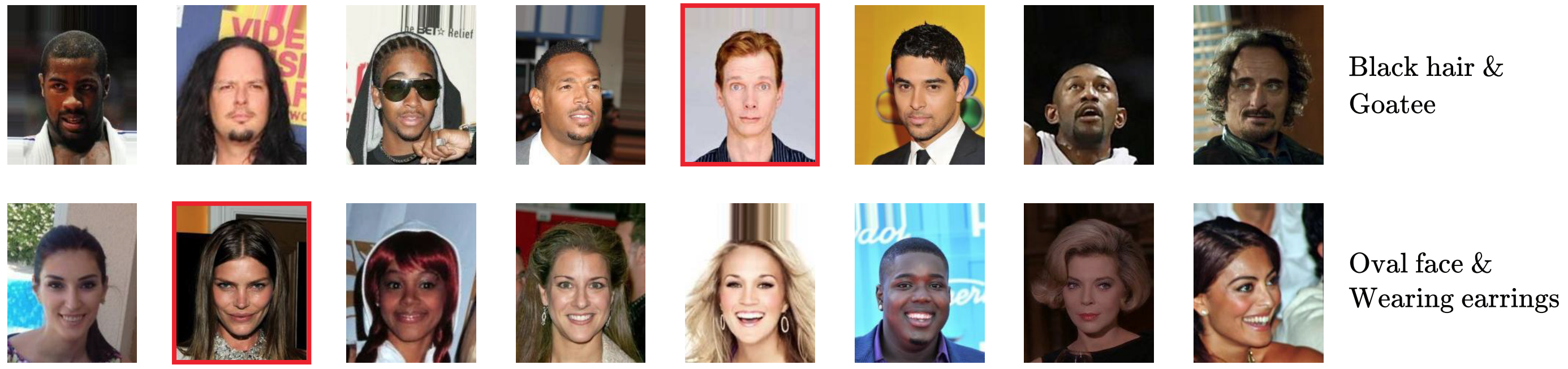

Permutation invariance appears naturally in the context of problems where we wish to view a collection of input data as a set of data points with no intrinsic ordering. This is in contrast to the more usual perspective of viewing data as consisting of vectors/matrices etc., which do carry order information. One example of such a task is anomaly detection. E.g., Lee et al. train a neural net on detecting outliers from a set of images as shown below:

Obviously, in this case, we do not want the model to care about the order in which these images are presented – this property of the model is what we mean when we say permutation invariance.

A prominent permutation-invariant neural network architecture is Deep Sets, introduced in 2017 by Zaheer et al. By design, any function modeled by this architecture is permutation invariant. However, it is not so straight forward to understand the requirements that an instantiation of this architecture must satisfy in order to successfully model a given target function. This is the question addressed in our paper On the Limitations of Representing Functions on Sets.